Automations with AI?

5 Nov 2025 · 📖 in 10 minutes

Hacking Oddbox to build a recipe suggestion workflow powered by n8n and local Ollama

Earlier this year I added a Raspberry Pi 5 to my cluster (a simple Docker Swarm of four other Raspberry Pis) to start messing around with LLMs and AI workloads. Initial testing wasn't great: the Pi 5 could run the models, but the tokens per second were shockingly low for "chat"-type use cases. It was a nice idea, but it wasn't practical.

What about use cases where we don't need immediate responses?

Odd boxes and random recipes

Every week we get an Oddbox, which provides a bunch of interesting, seasonal fruit and veg. We like to cook each evening, but we don't enjoy having to decide what to cook after a busy day at work.

The current workflow is something like:

- open the fridge

- look at ingredients

- open Pinterest

- pick a recipe based on pictures

- read a life story

- cook food

This works well enough, but it requires that we already have all the necessary ingredients in (or can substitute). What if we could get the contents of the box ahead of time and then plan meals accordingly?

A new workflow might be like this:

- get the contents of the upcoming box (available every Tuesday on the Oddbox website)

- find recipes that we can make given the box (and additional ingredients)

- ...

- profit?

No API, no problem!

⚠️ Disclaimer: the following is purely for educational purposes, and the approach could violate Oddbox's Terms of Service.

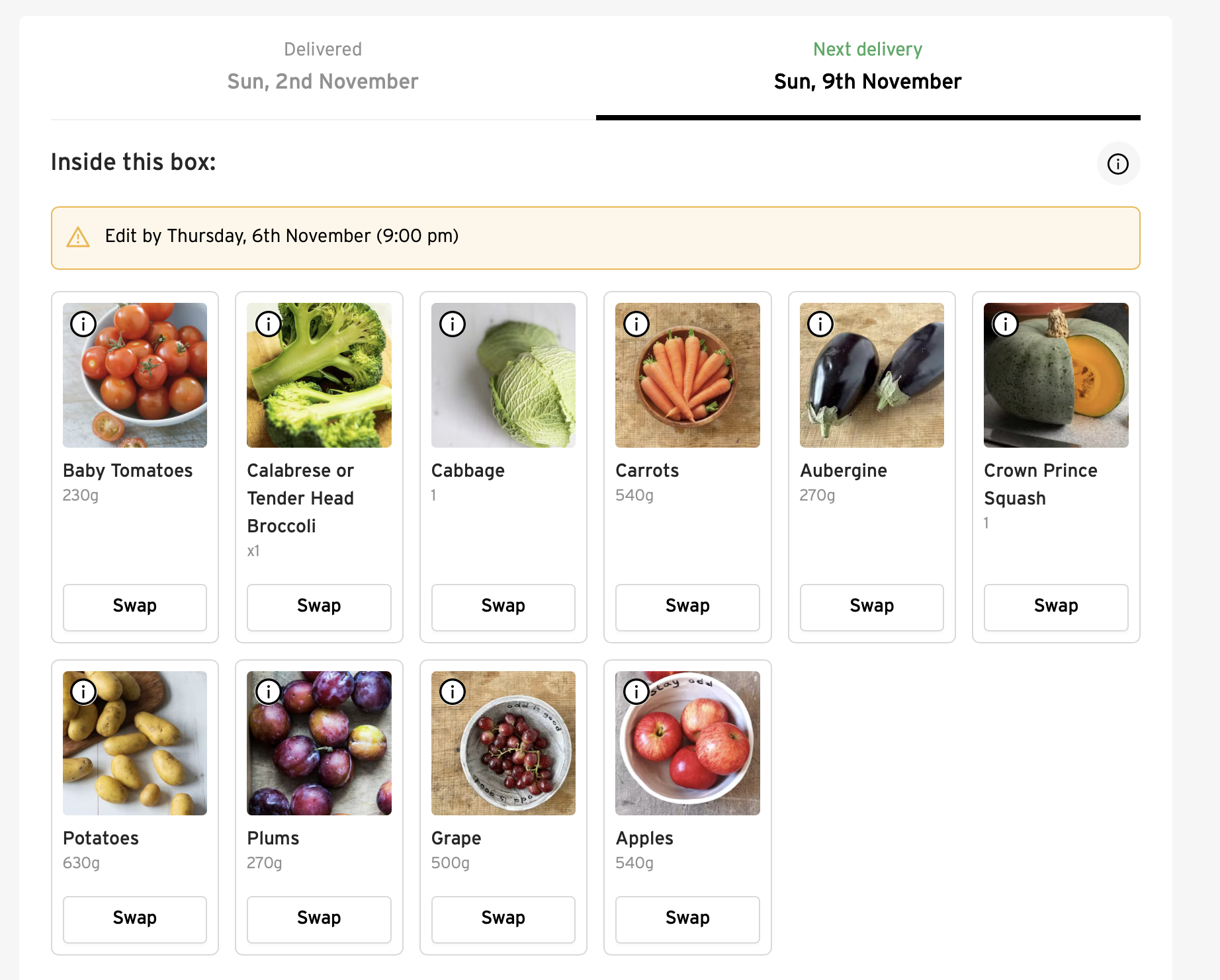

I spent a little bit of time trying to understand how the contents of this page are populated:

There appears to be no official API that I could use to get details of my box. Also, perhaps unsurprisingly, the whole page is rendered in JavaScript, so scraping the HTML won't help here.

Running a headless browser and driving it with Playwright might be an option, but it feels like overkill, and a quick look at the browser's network tab suggests there are only a few main calls.

In short, the calls needed are for: auth tokens (JWTs: access, refresh and id tokens), the subscription (for a box id and a delivery id), and finally the box details (technically a customization call, as it's the "Manage Box" part of the app).

The exact implementation on my end isn't pretty. The URLs should come from the JavaScript bundle, but I haven't done that; I just grabbed the cURL commands for the three requests from the network tab and ran them from the command line to make sure they still work.

At some point I'll need to remove the hardcoding and take the URLs from the bundle directly; it's pretty fragile right now, as there's no guarantee they'll stay the same.
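Taking the URLs from the bundle could start as simply as scanning the downloaded JavaScript for quoted absolute URLs and keeping the ones that mention a known path fragment. This is a minimal sketch under that assumption: findApiUrls is a hypothetical helper, and the keywords passed to it would have to match whatever the real endpoints look like.

```typescript
// Hypothetical helper: find quoted absolute URLs in a JavaScript bundle and keep
// those containing any of the given keywords (e.g. "subscription").
function findApiUrls(bundle: string, keywords: string[]): string[] {
  // A quote or backtick, then http(s)://, then everything up to the next quote/whitespace.
  const urlPattern = /["'`](https?:\/\/[^"'`\s]+)["'`]/g;
  const found = new Set<string>(); // de-duplicates repeated references
  let match: RegExpExecArray | null;
  while ((match = urlPattern.exec(bundle)) !== null) {
    const url = match[1];
    if (keywords.some((k) => url.includes(k))) found.add(url);
  }
  return Array.from(found); // in order of first appearance
}
```

Bundles often build URLs from a base URL plus relative paths, so in practice the pattern may need to look for path fragments rather than full URLs.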

The end result of all this is a series of API calls that return a response I can use to grab a list of the ingredients that will be in my box, e.g. baby tomatoes, broccoli, cabbage, carrots, etc.
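The three calls chain together: the access token from the first presumably authorises the other two, and the ids from the subscription feed the box request. A minimal sketch of that chain, with the fetch function passed in so it can be exercised against a stub. Only subscription_list and cf_box_id come from the real responses (they appear in the n8n expression later on); the base URL, paths, login body and the access_token / delivery_id field names are all placeholders, since the real values live in the Oddbox bundle.

```typescript
// Minimal fetch-like signature so the chain can run against a stub or the real fetch.
type HttpInit = { method?: string; headers?: Record<string, string>; body?: string };
type FetchLike = (url: string, init?: HttpInit) => Promise<{ json(): Promise<any> }>;

// Placeholder base URL and paths: the real ones live in Oddbox's JavaScript bundle.
const BASE_URL = "https://api.example.invalid";

async function fetchBoxDetails(fetchFn: FetchLike, email: string, password: string): Promise<any> {
  // 1. Authenticate. The access token is assumed to sit under a hypothetical
  //    `access_token` field alongside the refresh and id tokens.
  const authRes = await fetchFn(`${BASE_URL}/auth/login`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ email, password }),
  });
  const tokens = await authRes.json();
  const headers = { Authorization: `Bearer ${tokens.access_token}` };

  // 2. Read the subscription for the box id (cf_box_id) and the delivery id
  //    (`delivery_id` is a hypothetical field name).
  const subRes = await fetchFn(`${BASE_URL}/subscriptions`, { headers });
  const sub = (await subRes.json()).subscription_list[0].subscription;
  const boxId = String(sub.cf_box_id);
  const deliveryId = String(sub.delivery_id);

  // 3. Fetch the "Manage Box" customization data for that delivery and box.
  const boxRes = await fetchFn(
    `${BASE_URL}/deliveries/${encodeURIComponent(deliveryId)}/box?box_id=${encodeURIComponent(boxId)}`,
    { headers },
  );
  return boxRes.json();
}
```

In real use the global fetch fits the FetchLike signature and can be passed straight in.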

n8n m8

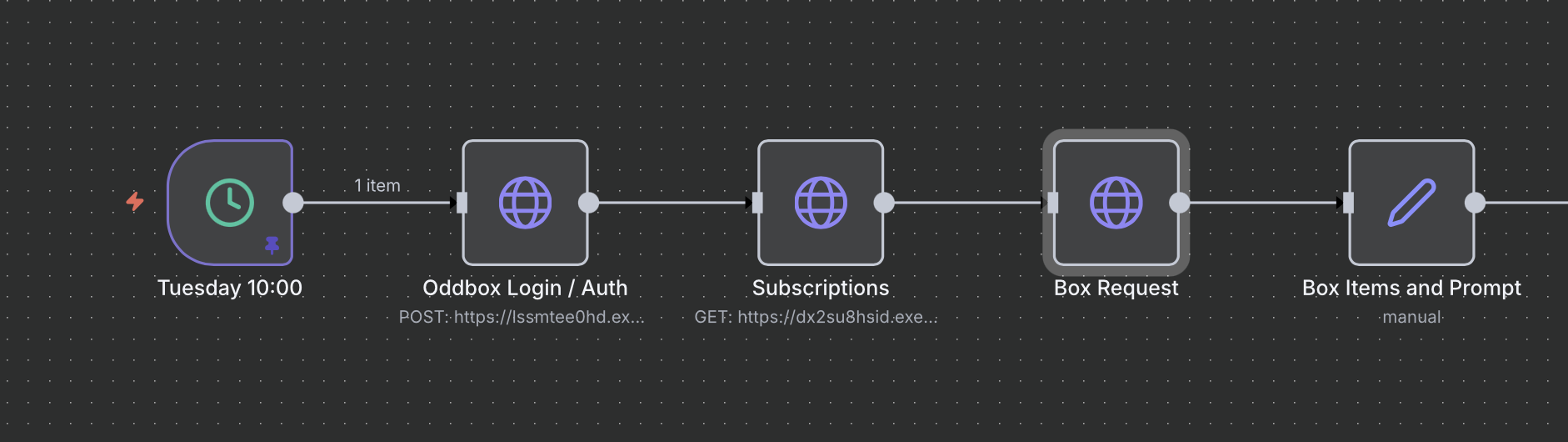

Next up is writing a workflow that can make the above calls and return the ingredients list for use in later steps.

I wanted to have a play with n8n.io, self-described as "the practical way to make a business impact with AI", as it's perfect for this kind of project, where I'll sprinkle in some AI to generate recipes.

The n8n platform itself is a "no-code" workflow builder (though I ended up writing a decent amount of JavaScript, as you can see below) with many, many integrations with external apps, plus user templates that you can add or buy.

There's a lot to figure out and plenty of documentation to get started.

After a bit of trial and error, I have a flow that outputs the contents of the box using HTTP Request nodes, into which I can paste the cURL commands directly and then update them as needed.

The flow setup is pretty nice, and there's a helpful {{ $json .. }} expression syntax that lets you manipulate input data with JavaScript. You can see this below, where I've pulled out the specific box for my subscription and then mapped over each entry to pull out the ingredient's name.
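Outside n8n, the same extraction could be written as a plain TypeScript function. The types are assumptions that model only the fields the expression touches (list, box_id, subscription_list, cf_box_id, default_produce, name); the expression's filter(...).first() behaves like find(...) here, and `boxes` stands in for $json.list.

```typescript
// Only the fields the n8n expression uses are modelled; real responses carry more.
type Produce = { name: string };
type Box = { box_id: string | number; default_produce: Produce[] };
type Subscriptions = {
  subscription_list: { subscription: { cf_box_id: string | number } }[];
};

// Plain-TypeScript equivalent of:
//   $json.list.filter(item => item.box_id == <cf_box_id>).first().default_produce.map(item => item.name)
function ingredientsForSubscription(boxes: Box[], subs: Subscriptions): string[] {
  const boxId = subs.subscription_list[0]?.subscription.cf_box_id;
  // Loose equality, as in the expression, so a string id matches a numeric one.
  const box = boxes.find((b) => b.box_id == boxId);
  // Unlike the expression, return an empty list instead of throwing when nothing matches.
  return box ? box.default_produce.map((p) => p.name) : [];
}
```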

This first part of the workflow is useful and, to be honest, we could stop there and just have a message with the ingredients sent to my phone each Sunday when we, aspirationally, do our meal prep.

But let's see what fun we can have.

O llama, how art thou?

As mentioned at the top, my Pi 5-powered Ollama instance runs LLMs fairly slowly, but that's probably OK: given this only needs to run once a week, it can take as long as it needs.

I looked into using Claude / ChatGPT, but each required a credit card, and the whole point of setting up the Pi 5 was to run LLMs for exactly these kinds of experiments.

My initial test was to generate recipes from the ingredients; the prompt for this is below:

| Prompt v1 |
|---|
| You are a top chef who can easily make the most complex meals, today you're being asked to work on a series of dishes for 5 days worth of meals that include: {{ $json.list.filter(item => item.box_id == $('Subscriptions').item.json.subscription_list[0].subscription.cf_box_id ).first().default_produce.map(item => item.name) }}. Each meal must have a decent carbohydrate, protein and vegetable and should portion out for 4 people. Feel free to pull in additional ingredients but use items that would be typically available in the UK shop Tesco. Please explain the theme for the recipe and any facts about the ingredients that are pertinent to the meal. List all steps needed to complete and plate the meal to the finest standard with garnish / herbs. |

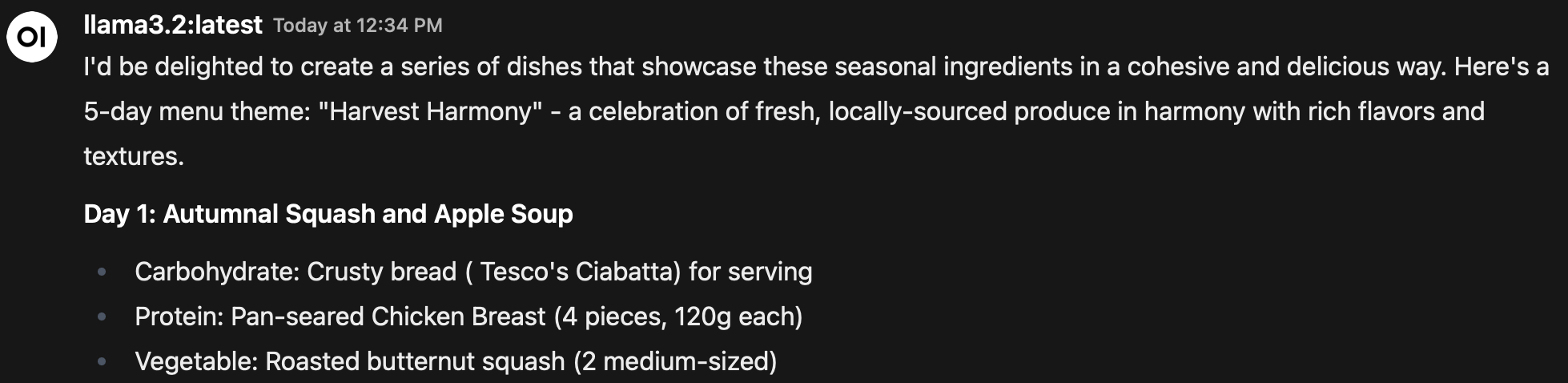

The result is what you might imagine: a long, wordy set of recipes, not unlike those you'd find on the web. It's a good start but not ideal.

Ideally the LLM would respond with structured data like JSON / YAML, so I spent a bit of time working and reworking the prompt until the output was consistently JSON and somewhat sane. The things that helped most with consistency were a big block of examples and a schema. I figured TypeScript would be a good way to describe the shape of the response, so there's a block in my prompt which looks like this:

| Prompt formatting snippet |
|---|
| you must return the response data as a JSON object with the structure as defined by a typescript type<br>type Recipe = {<br>name: string // e.g. butternut soup<br>ingredients: Array<br>description: string // e.g. this is a hearty soup<br>steps: Array<br>flavour_text: string // Any interesting fact about an ingredient / origin of the dish<br>}<br>type Response = {<br>days: Recipe[]<br>message: string // e.g here's your suggestions you'll need some additional ingredients<br>additional_ingredients: Array<br>}<br>I expect a { days: ..., message: ...} object as a result, do NOT return the typescript, do not format with backticks, the response should only be returned as a valid JSON object. |
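Because the schema is already written in TypeScript, it can double as a runtime check once the output has been parsed, so JSON that parses but has the wrong shape is rejected before anything is sent on. A sketch, assuming the prompt's bare `Array` fields hold strings; `Response` is renamed `RecipeResponse` to avoid clashing with the built-in fetch `Response` type, and isRecipeResponse is a hypothetical helper, not something n8n provides.

```typescript
// The prompt's schema, with the bare `Array` fields assumed to be string arrays.
type Recipe = {
  name: string;
  ingredients: string[];
  description: string;
  steps: string[];
  flavour_text: string;
};
type RecipeResponse = { days: Recipe[]; message: string; additional_ingredients: string[] };

const isStringArray = (v: unknown): v is string[] =>
  Array.isArray(v) && v.every((x) => typeof x === "string");

// Checks every field of a single recipe.
function isRecipe(v: unknown): v is Recipe {
  if (typeof v !== "object" || v === null) return false;
  const r = v as Record<string, unknown>;
  return (
    typeof r.name === "string" &&
    isStringArray(r.ingredients) &&
    typeof r.description === "string" &&
    isStringArray(r.steps) &&
    typeof r.flavour_text === "string"
  );
}

// Checks the top-level object and every recipe in `days`.
function isRecipeResponse(v: unknown): v is RecipeResponse {
  if (typeof v !== "object" || v === null) return false;
  const r = v as Record<string, unknown>;
  return (
    Array.isArray(r.days) &&
    r.days.every(isRecipe) &&
    typeof r.message === "string" &&
    isStringArray(r.additional_ingredients)
  );
}
```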

Despite quite clear instructions about "valid JSON", the LLM would still return markdown and special characters (e.g. ”, the "curly quotation mark") in the response, which means an unexpected quote character in the JSON breaks parsing. Something else that was super helpful here: n8n appears to anticipate typical LLM responses, with a couple of helpers like replaceSpecialChars() and removeMarkdown(), which I've used liberally to clean the LLM output.
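For anyone not using n8n, here's a rough plain-TypeScript stand-in for that clean-up step. It assumes the model's only sins are markdown code fences, chatter around the object and curly quotes, and it is not how n8n's own helpers are implemented. One caveat: swapping curly double quotes for straight ones repairs them when they're used as JSON delimiters, but would break a value that legitimately contains “quoted” words.

```typescript
// Hypothetical helper: strip common LLM noise and try to parse the JSON inside.
// Returns null when nothing parseable is found.
function cleanLlmJson(raw: string): unknown {
  // Drop ``` and ```json fences the model adds despite being told not to.
  let text = raw.replace(/```(?:json)?/gi, "");
  // Normalise curly quotes: double to ", single to '.
  text = text.replace(/[\u201C\u201D]/g, '"').replace(/[\u2018\u2019]/g, "'");
  // Keep only the outermost {...} in case the model wrapped it in prose.
  const start = text.indexOf("{");
  const end = text.lastIndexOf("}");
  if (start === -1 || end <= start) return null;
  try {
    return JSON.parse(text.slice(start, end + 1));
  } catch {
    return null;
  }
}
```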

The result? It's not fast, taking ~5-10 minutes per prompt, which generates five recipes and a treat. Below is the "sweet treat":

So while I can't chat with the LLM, I can prompt it and come back to it later. The model I used to generate this was llama3.2:3b. There are, of course, countless other models, but in my testing the smaller models tended to miss the brief somewhat: take "Baby Tomato & Vegetable Tagliatelle with Roasted Carrot & Potato", which starts strong but... potato and pasta?
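For reference, the same model can also be called directly through Ollama's REST API, outside n8n. The sketch below builds a request for the /api/generate endpoint: `stream: false` returns a single object whose `response` field holds the generated text, and `format: "json"` asks Ollama to constrain the output to valid JSON (recent releases also accept a JSON schema there), which may reduce, though not necessarily remove, the clean-up needed. The host is an assumption; 11434 is Ollama's default port.

```typescript
// Request body for Ollama's POST /api/generate endpoint.
type OllamaGenerateRequest = { model: string; prompt: string; stream: boolean; format: "json" };

function buildGenerateRequest(prompt: string, model = "llama3.2:3b"): OllamaGenerateRequest {
  // stream: false -> one JSON reply instead of a stream of chunks.
  // format: "json" -> ask Ollama to constrain the output to valid JSON.
  return { model, prompt, stream: false, format: "json" };
}

// Assumed host: replace localhost with the Pi's address in the swarm.
async function generate(prompt: string, host = "http://localhost:11434"): Promise<string> {
  const res = await fetch(`${host}/api/generate`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildGenerateRequest(prompt)),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const data = (await res.json()) as { response: string };
  return data.response; // the model's text, which should itself be a JSON string
}
```

Ollama's docs also recommend still asking for JSON in the prompt itself when using JSON mode, so the schema block above remains useful.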

I've used the signal-cli-rest-api container that I have running in my cluster to fire off a series of messages, one per recipe.
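Sending a recipe boils down to formatting it as text and posting it to the container. A minimal sketch, assuming signal-cli-rest-api's POST /v2/send endpoint, whose JSON body takes `message`, the registered sender `number` and a `recipients` list. The host name, port and phone numbers are hypothetical, and RecipeSummary is a subset of the Recipe type described in the prompt.

```typescript
// Subset of the prompt's Recipe shape needed for a message.
type RecipeSummary = { name: string; description: string; ingredients: string[]; steps: string[] };

// Turns one recipe into a plain-text message body.
function formatRecipe(r: RecipeSummary): string {
  const ingredients = r.ingredients.map((i) => `- ${i}`).join("\n");
  const steps = r.steps.map((s, n) => `${n + 1}. ${s}`).join("\n");
  return `${r.name}\n${r.description}\n\nIngredients:\n${ingredients}\n\nSteps:\n${steps}`;
}

// Request body for signal-cli-rest-api's POST /v2/send.
function buildSignalSend(message: string, sender: string, recipients: string[]) {
  return { message, number: sender, recipients };
}

// Hypothetical service name and port for the container in the swarm.
async function sendRecipe(r: RecipeSummary, sender: string, recipient: string, host = "http://signal-api:8080"): Promise<void> {
  const res = await fetch(`${host}/v2/send`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildSignalSend(formatRecipe(r), sender, [recipient])),
  });
  if (!res.ok) throw new Error(`signal-cli-rest-api returned HTTP ${res.status}`);
}
```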

That's a wrap

It's a pretty nice example of what's possible with a few tools, and both n8n and Ollama would probably run just fine on my laptop too!

Reverse engineering the Oddbox API was fun, though I'd much prefer a proper public API (or even an MCP server); still, the n8n workflow made slotting the calls together super easy. The API calls are fragile and will break at some point, but maintaining them isn't high on my list, so let's see how useful this is and maybe I'll revisit.

One way I've been thinking about this is to use n8n workflows as a test bed for ideas, and then "productionise" the ones that prove useful by writing some Rust, Go, etc. as and when, assuming there's a benefit to having a dedicated container running a specific workflow.

Thanks for reading this far and share your favourite n8n templates with me over on Mastodon.